Background#

This is an introduction to website optimization.

soapffz has written over 40 articles:

When introducing my website to others, they always ask, "How much traffic do you have?"

soapffz can only answer, "I haven't submitted the site to Baidu, so Baidu can't find it."

Therefore, this article explains why I hadn't submitted the site to Baidu and how I solved it.

It is divided into two parts: sitemap generation and Baidu submission.

Sitemap Generation#

Initial Pitfalls#

After I built the website, every article link contained "index.php".

To remove "index.php", I wanted the links to use the following format:

- https://soapffz.com/sec/247.html
- https://soapffz.com/python/245.html
- https://soapffz.com/tools/239.html
- https://soapffz.com/sec/233.html

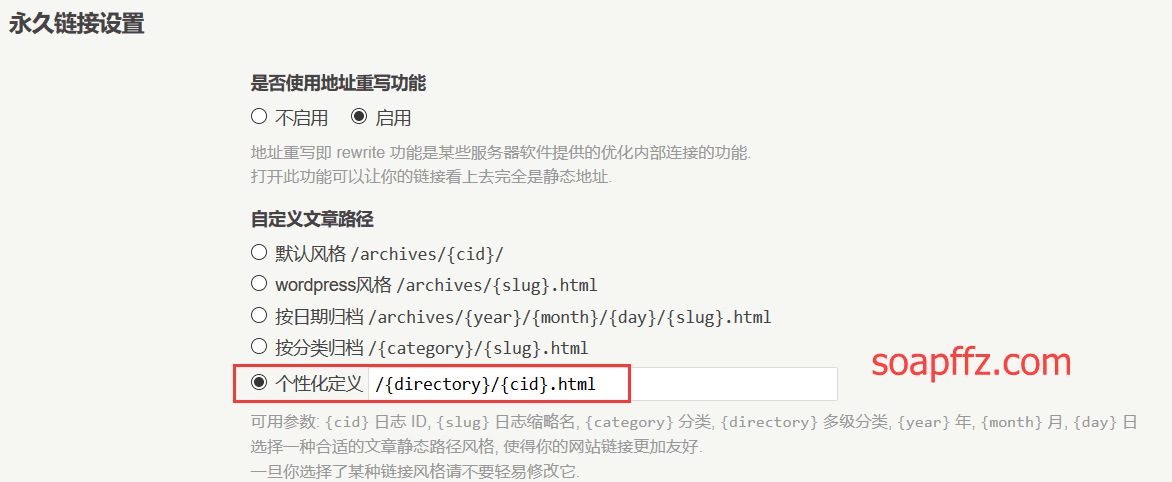

I made the following changes in the backend:

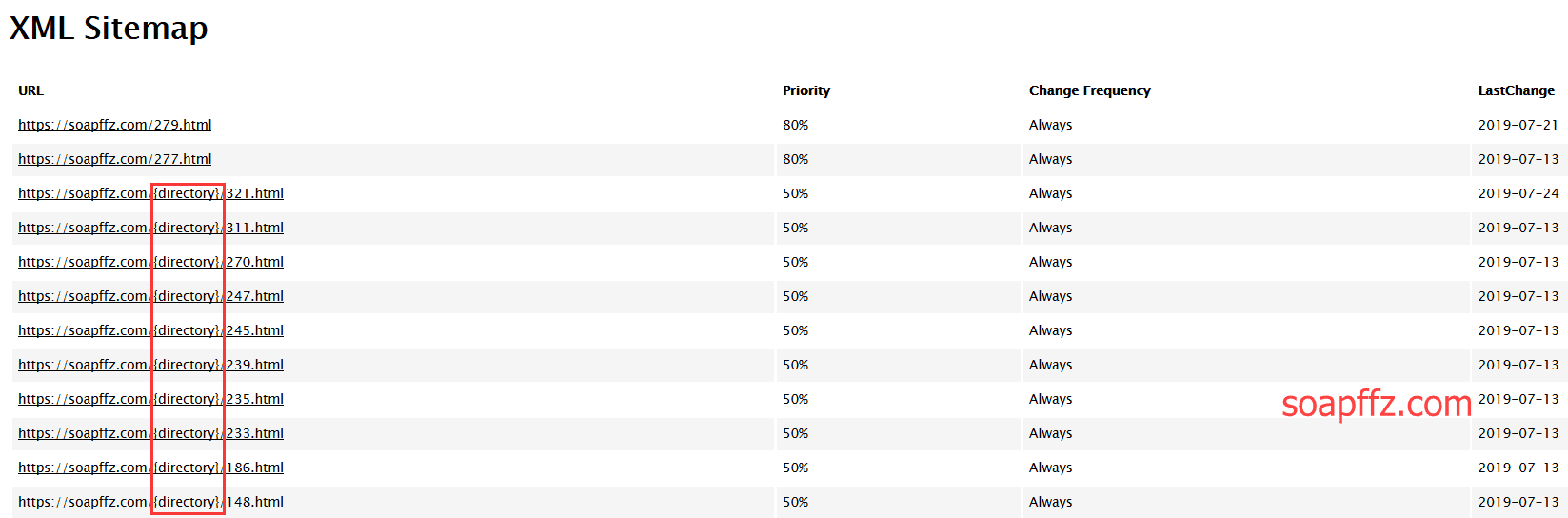

This caused an issue when using some sitemap generation plugins:

Troubleshooting#

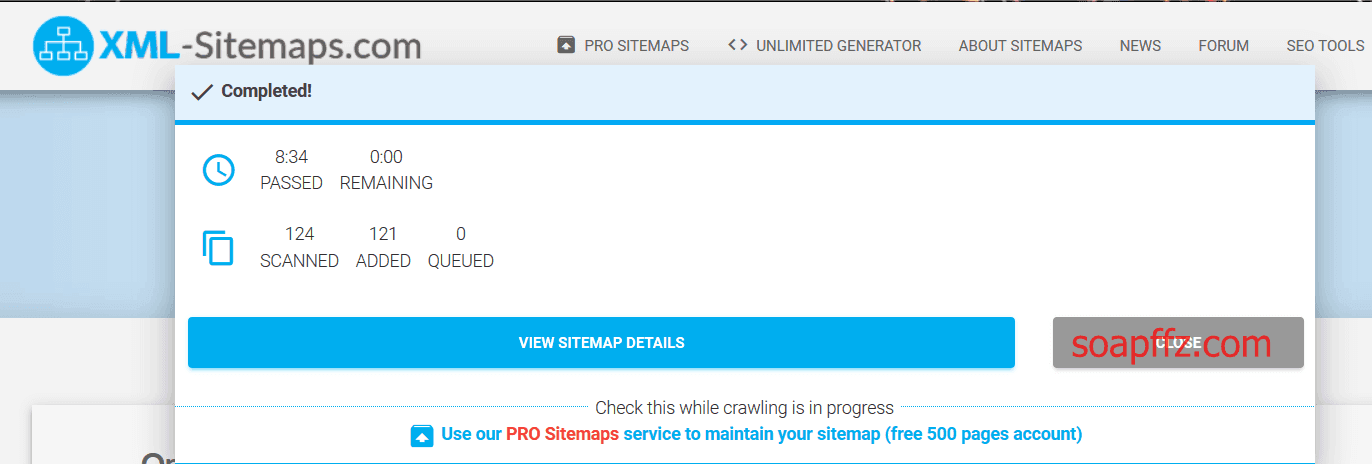

Besides sitemap generation plugins, I also tried online sitemap generators, such as this one.

As you can see, it took eight and a half minutes to crawl 124 links. Click "View Sitemap Details" to see them.

Click the button under "Other Downloads" to download the sitemap in every available format:

The crawled links are correct, but there is a problem: generating a sitemap this way is too cumbersome.

I also tried writing a Python script to crawl every URL on the site, but that felt like overkill just to work around a URL format issue.
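For reference, the crawling part needs only the standard library. The sketch below is not the script I tried; it is a minimal breadth-first crawler that assumes pages link to each other with ordinary `<a href>` tags. "crawl_site", "extract_links", "http_get", and the "max_pages" cap are hypothetical names chosen for this example.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse
from urllib.request import urlopen


class LinkParser(HTMLParser):
    """Collect the href value of every <a> tag fed to it."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def extract_links(page_url, html):
    """Resolve each href against page_url and drop any #fragment."""
    parser = LinkParser()
    parser.feed(html)
    return [urldefrag(urljoin(page_url, href))[0] for href in parser.hrefs]


def http_get(url):
    """Default fetcher: download a page and decode it as UTF-8 text."""
    with urlopen(url, timeout=10) as resp:
        return resp.read().decode("utf-8", errors="replace")


def crawl_site(start_url, fetch=http_get, max_pages=500):
    """Breadth-first crawl restricted to start_url's host; returns every URL discovered."""
    host = urlparse(start_url).netloc
    seen = {start_url}
    queue = deque([start_url])
    fetched = 0
    while queue and fetched < max_pages:
        url = queue.popleft()
        fetched += 1
        try:
            html = fetch(url)
        except Exception as exc:  # skip pages that fail to download
            print("skipped", url, exc)
            continue
        for link in extract_links(url, html):
            # Only follow links on the same host that we have not queued yet
            if urlparse(link).netloc == host and link not in seen:
                seen.add(link)
                queue.append(link)
    return sorted(seen)
```

Even with this, you would still have to filter out list pages (pagination, category pages) and write the result into a sitemap, which is why it felt like too much work for a URL format problem.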

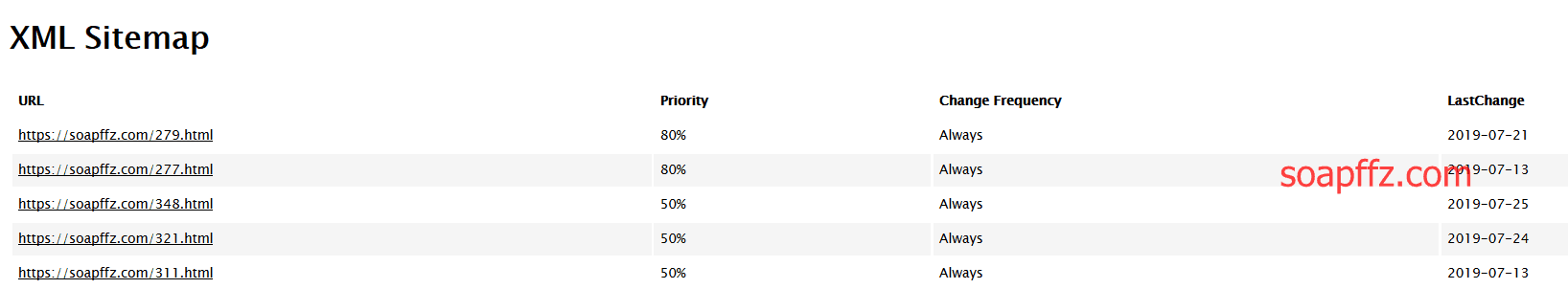

Final Solution#

Therefore, I gave up on including the category directory in the URLs:

Then I generated the sitemap with Bayunjiang's plugin, which I introduced earlier:

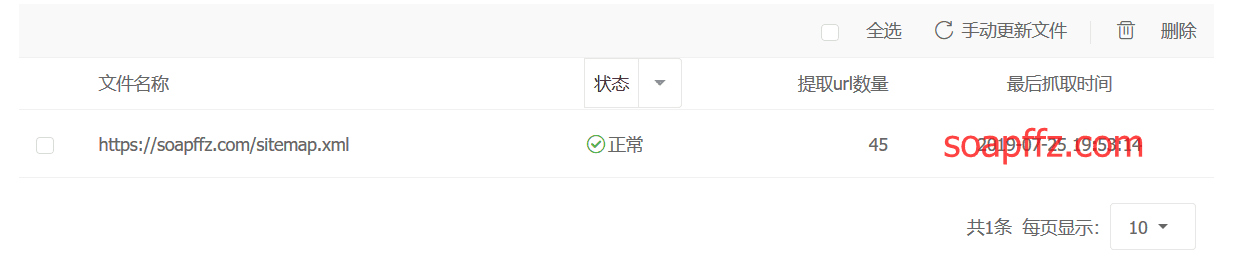
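The plugin does this for you, but the file it produces follows the sitemaps.org protocol: a `<urlset>` root with one `<url><loc>…</loc></url>` entry per page. A minimal sketch of building such a file with the standard library, assuming you already have the list of post URLs; "build_sitemap" is a hypothetical helper, and optional tags such as `<lastmod>` are left out.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a sitemap.xml document (as a str) with one <url><loc> per URL."""
    # Declare the sitemap namespace as the default namespace on the root element
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for u in urls:
        url_el = ET.SubElement(urlset, "url")
        ET.SubElement(url_el, "loc").text = u  # ElementTree escapes & and < for us
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    print(build_sitemap(["https://soapffz.com/247.html", "https://soapffz.com/245.html"]))
```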

Then, I tried to submit it to Baidu:

You can see that the link submission was successful.

Active Push to Baidu#

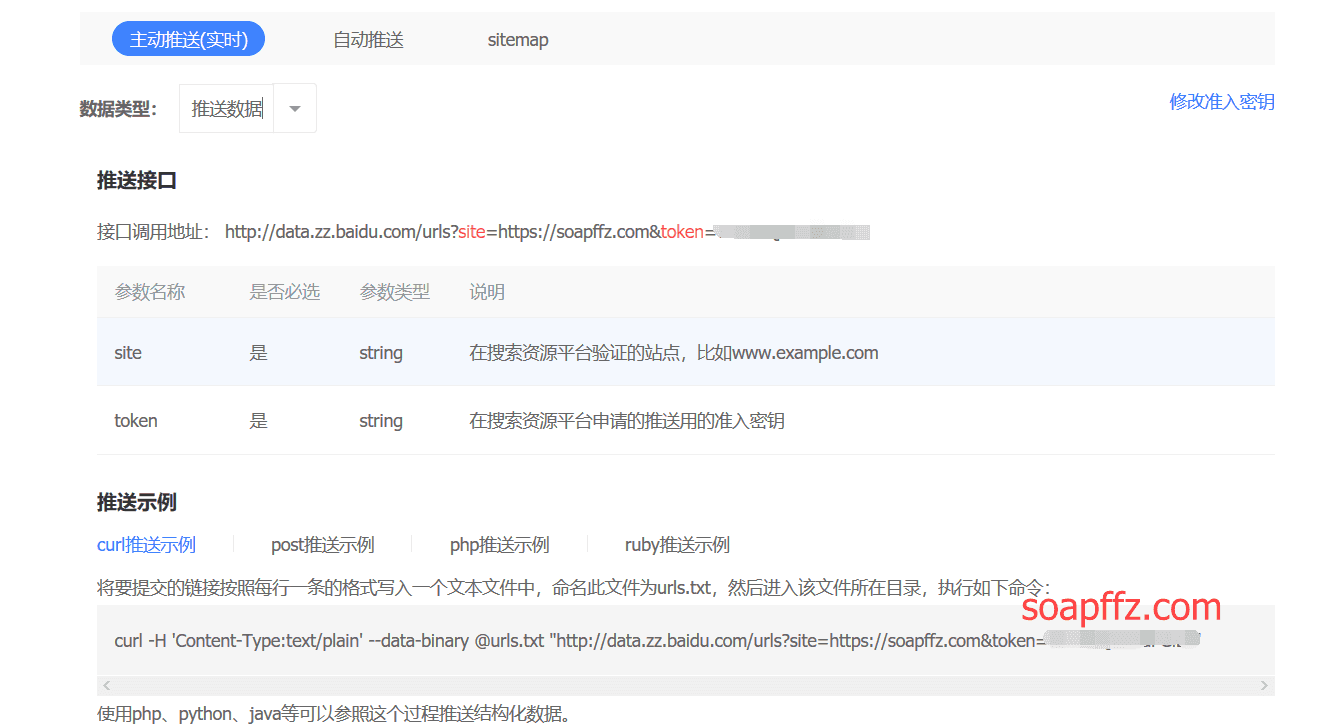

Generating the sitemap is not enough. We can use active push in combination with the sitemap to make Baidu index the website faster.

Baidu's active push method:

In short, you send a "url.txt" file (one URL per line) to the Baidu resource platform's push API, with your own token in the request link.
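To make the request shape concrete, here is a minimal standard-library sketch that builds (but does not send) the same POST the script makes: the push API link carries the site and token, and the body is the URL list joined by newlines, just like "url.txt". "build_push_request" is a hypothetical helper; the endpoint is the one used in the script.

```python
from urllib.request import Request

PUSH_API = "http://data.zz.baidu.com/urls"


def build_push_request(site, token, urls):
    """Build a POST whose plain-text body lists one URL per line, like url.txt."""
    # Same link format as the script: ?site=...&token=...
    full_url = "{}?site={}&token={}".format(PUSH_API, site, token)
    body = "\n".join(urls).encode("utf-8")
    return Request(full_url, data=body, headers={"Content-Type": "text/plain"}, method="POST")
```

Sending it would then be a matter of "urllib.request.urlopen(req)"; the script below does the equivalent with "requests.post".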

I wrote a script in Python based on articles I found online.

The logic is: parse the site's current sitemap.xml to get all URLs, then submit them with the "post" method of the "requests" library to the push API link built from your own token and site name, and check the returned status.

The complete code is as follows:

```python
# -*- coding: utf-8 -*-
'''
@author: soapffz
@function: Crawl URLs from the website's sitemap.xml and actively push them to Baidu Webmaster Platform
@time: 2019-07-25
'''
import requests
import xmltodict


class BaiduLinkSubmit(object):
    def __init__(self, site_domain, sitemap_url, baidu_token):
        self.site_domain = site_domain
        self.sitemap_url = sitemap_url
        self.baidu_token = baidu_token
        self.urls_l = []  # Store the URLs parsed from the sitemap
        self.parse_sitemap()

    def parse_sitemap(self):
        # Parse the website's sitemap.xml to get all URLs
        try:
            data = xmltodict.parse(requests.get(self.sitemap_url, timeout=10).text)
            entries = data["urlset"]["url"]
            # xmltodict returns a dict instead of a list when there is only one <url>
            if isinstance(entries, dict):
                entries = [entries]
            self.urls_l = [t["loc"] for t in entries]
        except Exception as e:
            print("Error parsing sitemap.xml:", e)
            return
        self.push()

    def push(self):
        url = "http://data.zz.baidu.com/urls?site={}&token={}".format(
            self.site_domain, self.baidu_token)
        headers = {"Content-Type": "text/plain"}
        # One URL per line in the body, like url.txt
        r = requests.post(url, headers=headers, data="\n".join(self.urls_l), timeout=10)
        data = r.json()
        # Failed pushes come back as {"error": code, "message": text}
        if "error" in data:
            print("Push failed ({}): {}".format(data["error"], data.get("message", "")))
            return
        print("Successfully pushed {} URLs to Baidu Search Resource Platform".format(data.get("success", 0)))
        print("Remaining push quota for today:", data.get("remain", 0))
        not_same_site = data.get("not_same_site", [])
        not_valid = data.get("not_valid", [])  # the response key is "not_valid"
        if not_same_site:
            print("There are {} URLs that were not processed because they are not from this site:".format(len(not_same_site)))
            for t in not_same_site:
                print(t)
        if not_valid:
            print("There are {} invalid URLs:".format(len(not_valid)))
            for t in not_valid:
                print(t)


if __name__ == "__main__":
    site_domain = "https://soapffz.com"  # Fill in your website here
    sitemap_url = "https://soapffz.com/sitemap.xml"  # Fill in the complete link to your sitemap.xml here
    baidu_token = ""  # Fill in your Baidu token here
    BaiduLinkSubmit(site_domain, sitemap_url, baidu_token)
```
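If you would rather not depend on "xmltodict", the sitemap can also be parsed with the standard library's ElementTree. A minimal sketch, assuming the sitemap declares the standard sitemaps.org namespace (a sitemap without it would return an empty list here); "sitemap_urls" is a hypothetical replacement for the parsing step in "parse_sitemap", and it handles a single-URL sitemap without special-casing.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_bytes):
    """Return the text of every <url>/<loc> in a sitemaps.org-style sitemap."""
    root = ET.fromstring(xml_bytes)
    # findall always returns a list, even when there is only one <url>
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS) if loc.text]
```

In the script it would be called as "sitemap_urls(requests.get(self.sitemap_url, timeout=10).content)"; passing the raw bytes lets the XML declaration decide the encoding.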

I tested it in three scenarios: normal operation, with the sitemap plugin turned off, and with a made-up sitemap address.

The results are as follows:

MIP & AMP Web Acceleration#

Baidu Webmaster Platform describes them as follows:

- MIP (Mobile Instant Page) is an open technical standard for mobile web pages. It provides MIP-HTML specifications, MIP-JS runtime environment, and MIP-Cache page caching system to achieve mobile web acceleration.

- AMP (Accelerated Mobile Pages) is an open-source project by Google. It defines lightweight web pages that load quickly on mobile devices, aiming to make pages load fast and look good there. Baidu currently supports AMP submission.

In short, both speed up pages on mobile devices, which should help with SEO.

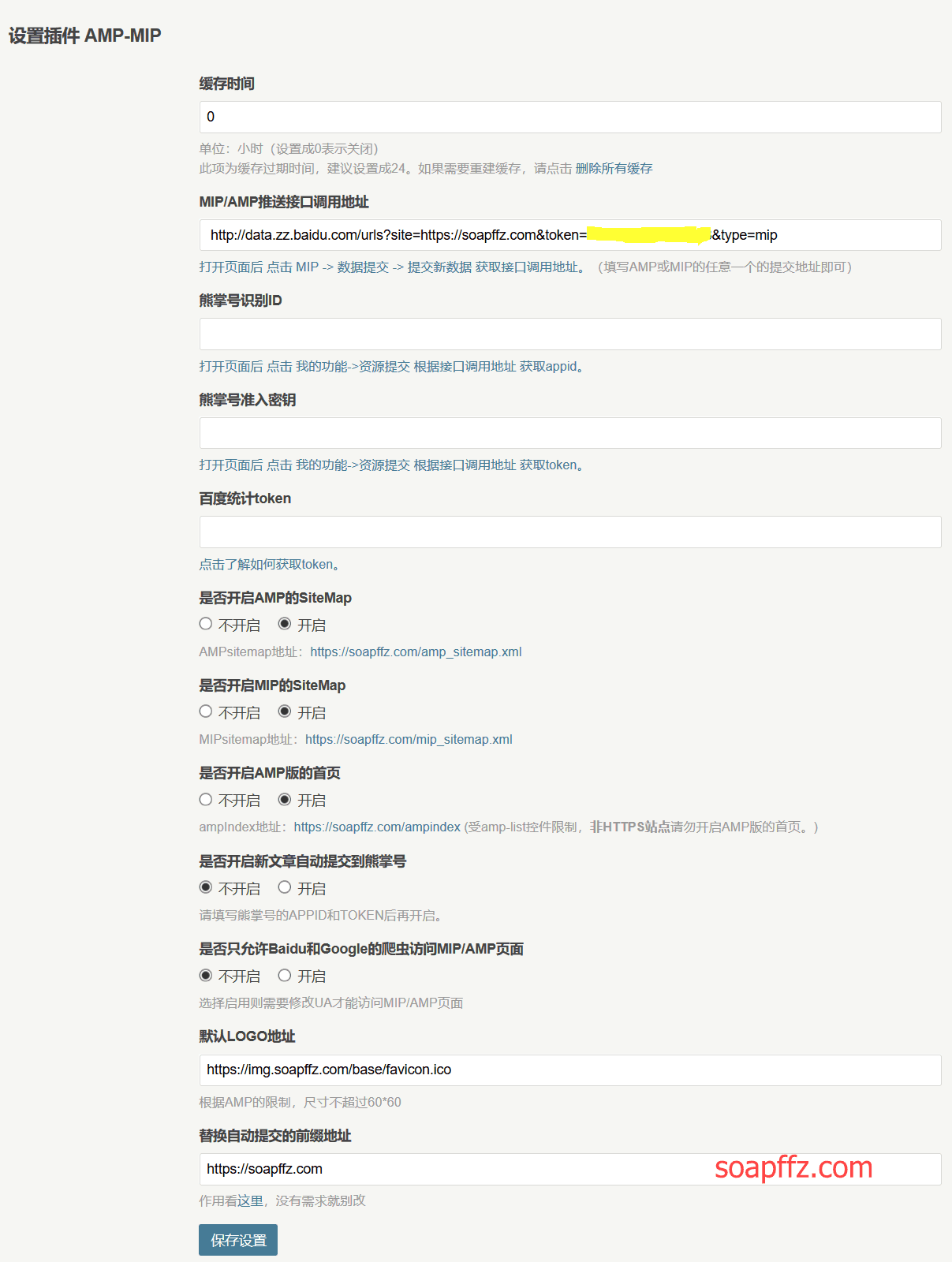

The plugin used here is Holmesian's Typecho-AMP.

The backend interface of the plugin is shown in the image:

It also supports Baidu Baijiahao, which I don't need, so I only configured the push interface settings.

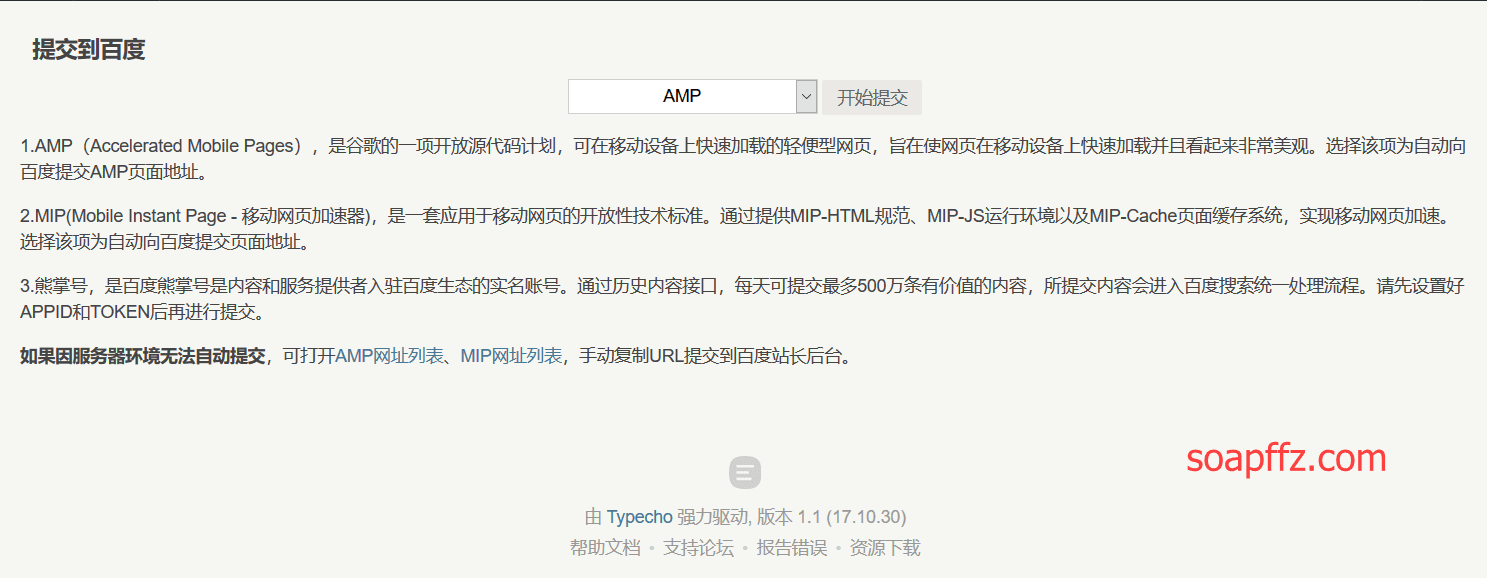

The plugin then adds a button to the control panel's dropdown menu for pushing AMP/MIP pages to Baidu:

Clicking on it shows the following:

Then I tried a push:

The push failed. After some troubleshooting, I found that Baidu's active push rules for MIP/AMP work slightly differently.

The only difference from basic link submission is that "mip" or "amp" is added to the end of the push API link, and the response fields become "success_mip"/"success_amp" and so on.

So I modified the code above to push the regular URLs and their MIP/AMP versions together:
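A sketch of that rule, not the modified script itself. It assumes the marker is passed as a "type" query parameter ("&type=mip" or "&type=amp") on the push API link, which is my reading of Baidu's MIP/AMP push instructions; check the platform's current documentation before relying on it. "push_endpoint" and "success_count" are hypothetical helpers.

```python
def push_endpoint(site, token, page_type=None):
    """Build the push API link; page_type "mip" or "amp" selects that push type."""
    url = "http://data.zz.baidu.com/urls?site={}&token={}".format(site, token)
    if page_type is not None:
        if page_type not in ("mip", "amp"):
            raise ValueError("page_type must be 'mip' or 'amp'")
        url += "&type=" + page_type  # assumption: Baidu's "type" parameter
    return url


def success_count(data, page_type=None):
    """Read the success counter; its key gains a _mip/_amp suffix for those pushes."""
    key = "success" if page_type is None else "success_" + page_type
    return data.get(key, 0)
```

Pushing the same URL list three times, once per endpoint, and reading the matching counter from each response is enough to cover normal, MIP, and AMP submission.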